What Is Speech Service in Azure?

Azure Speech Service is a cloud-based AI service within Microsoft’s Azure AI services family (formerly Azure Cognitive Services) that lets developers integrate speech capabilities into applications. It supports speech-to-text conversion, text-to-speech synthesis, real-time translation, and speaker recognition. The service is built on deep learning models for both recognition and natural-sounding synthesis, which suits it to voice assistants, transcription services, and accessibility tools. Scalability, security controls, and broad language support are the main reasons enterprises and developers adopt it.

Speech-to-Text (STT) Capabilities

Azure’s Speech-to-Text feature converts spoken language into written text. It supports real-time transcription for live audio streams, such as meetings or customer calls, as well as batch processing for pre-recorded files. The service includes pre-trained models for more than 100 languages and dialects. Developers can also improve recognition accuracy with Custom Speech, which adapts a model to industry-specific terminology, accents, and background noise conditions.
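As a rough illustration of the real-time path, the sketch below builds (but does not send) a request for the short-audio speech-to-text REST endpoint and parses a response in the simple result format. The region `westus`, the key `YOUR_KEY`, the two-byte audio payload, and the helper names `build_stt_request` and `extract_text` are placeholders chosen for this example, and the sample response is hand-written rather than captured from the service.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional


def build_stt_request(region: str, key: str, wav_bytes: bytes,
                      language: str = "en-US") -> urllib.request.Request:
    """Build, without sending, a short-audio recognition request."""
    query = urllib.parse.urlencode({"language": language, "format": "simple"})
    url = (f"https://{region}.stt.speech.microsoft.com"
           f"/speech/recognition/conversation/cognitiveservices/v1?{query}")
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        # Assumes 16 kHz PCM audio in a WAV container.
        "Content-Type": "audio/wav; codecs=audio/pcm; samplerate=16000",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, data=wav_bytes, headers=headers,
                                  method="POST")


def extract_text(response_body: str) -> Optional[str]:
    """Return the recognized text, or None when recognition did not succeed."""
    payload = json.loads(response_body)
    if payload.get("RecognitionStatus") != "Success":
        return None
    return payload.get("DisplayText")


# Placeholder values: substitute a real region, key, and WAV payload.
request = build_stt_request("westus", "YOUR_KEY", b"\x00\x00")
# Hand-written sample in the shape of a simple-format response.
sample = '{"RecognitionStatus": "Success", "DisplayText": "Hello world."}'
print(request.full_url)
print(extract_text(sample))
```

Sending the request with `urllib.request.urlopen(request)` would require a valid key and real audio. For long-running or streaming audio, the Speech SDK is usually the better fit than this one-shot REST call.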

Text-to-Speech (TTS) Technology

The Text-to-Speech service transforms written text into lifelike spoken audio using neural networks. Azure offers a variety of pre-built voices across multiple languages, along with the ability to create custom-branded voices for unique applications. Developers can use Speech Synthesis Markup Language (SSML) to adjust pronunciation, intonation, and pauses, enhancing the natural flow of synthesized speech. This feature is widely used in virtual assistants, audiobooks, and accessibility tools like screen readers.
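To make the SSML controls concrete, here is a minimal sketch that assembles an SSML document with a slower speaking rate and a trailing pause, then parses it to confirm the markup is well-formed. The helper name `build_ssml` and its defaults are assumptions for this example. `en-US-JennyNeural` is used as a sample voice, and any other available voice name could be substituted.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text: str, voice: str = "en-US-JennyNeural",
               rate: str = "-10%", pause_ms: int = 500) -> str:
    """Wrap text in SSML that slows the speaking rate and appends a pause."""
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        'xml:lang="en-US">'
        f'<voice name={quoteattr(voice)}>'
        # escape() protects characters such as & and < in the input text.
        f'<prosody rate={quoteattr(rate)}>{escape(text)}</prosody>'
        f'<break time="{pause_ms}ms"/>'
        '</voice></speak>'
    )


ssml = build_ssml("Terms & conditions apply.")
root = ET.fromstring(ssml)  # raises ParseError if the markup is malformed
print(ssml)
```

The resulting string is what would be passed to the service in place of plain text. Escaping the input matters because an unescaped `&` or `<` would make the whole document invalid.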

Real-Time Speech Translation

Azure Speech Service enables seamless translation of spoken content between languages in real time. This functionality is particularly useful for global businesses, multilingual customer support, and live event captioning. The process involves three steps: converting speech to text, translating the text, and optionally converting it back to speech in the target language. With support for numerous language pairs, the service facilitates smooth cross-lingual communication.
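The three steps can be sketched as a small pipeline. This is a structural illustration only: `translate_speech` and the three stand-in functions are hypothetical, and in a real application each would be replaced by the corresponding service call.

```python
from typing import Callable, Optional, Tuple


def translate_speech(
    audio: bytes,
    recognize: Callable[[bytes], str],
    translate: Callable[[str], str],
    synthesize: Optional[Callable[[str], bytes]] = None,
) -> Tuple[str, str, Optional[bytes]]:
    """Run speech -> text -> translated text -> (optional) speech."""
    source_text = recognize(audio)        # step 1: speech to text
    target_text = translate(source_text)  # step 2: text translation
    # Step 3 is optional: captions need only text, dubbing needs audio.
    target_audio = synthesize(target_text) if synthesize else None
    return source_text, target_text, target_audio


# Stand-ins for the real service calls (hypothetical, for illustration).
def fake_recognize(audio: bytes) -> str:
    return "good morning"


def fake_translate(text: str) -> str:
    return {"good morning": "buenos días"}[text]


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


captions = translate_speech(b"...", fake_recognize, fake_translate)
dubbed = translate_speech(b"...", fake_recognize, fake_translate,
                          fake_synthesize)
print(captions)
print(dubbed)
```

Keeping the synthesis step optional mirrors the two common outputs: translated captions (text only) and translated audio.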

Speaker Recognition Features

The Speaker Recognition component provides two key functionalities: verification and identification. Speaker verification authenticates a user against their own enrolled voiceprint, enabling secure voice-based logins. Speaker identification determines which person from a group of enrolled speakers is talking, which is useful for attributing speech in meetings and call center analytics. Separating speakers who have not been enrolled is a different task, speaker diarization, which is part of speech-to-text. Both recognition features rely on AI models that analyze vocal characteristics.
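The difference between the two modes is essentially one-to-one versus one-to-many matching. The toy sketch below illustrates that distinction with cosine similarity over made-up three-dimensional vectors. It is not how the service computes voiceprints: the vectors, the `0.8` threshold, and the function names are all assumptions for illustration.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(sample: List[float], enrolled: List[float],
           threshold: float = 0.8) -> bool:
    """Verification (1:1): does the sample match one claimed identity?"""
    return cosine(sample, enrolled) >= threshold


def identify(sample: List[float], profiles: Dict[str, List[float]]) -> str:
    """Identification (1:N): which enrolled profile matches best?"""
    return max(profiles, key=lambda name: cosine(sample, profiles[name]))


# Made-up "voiceprints" for two enrolled speakers and one new sample.
profiles = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.5]}
sample = [0.85, 0.15, 0.25]

print(verify(sample, profiles["alice"]))
print(verify(sample, profiles["bob"]))
print(identify(sample, profiles))
```

Verification answers a yes/no question about a single claimed identity, while identification always returns the closest enrolled profile, so a production system would normally also apply a minimum score before accepting that answer.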

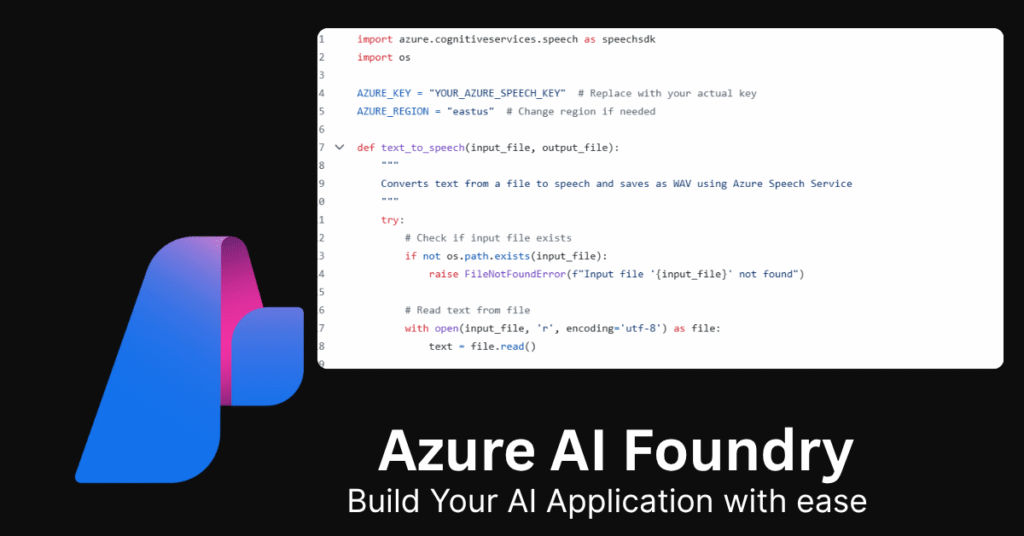

Integration and Deployment Options

Azure Speech Service offers multiple integration methods, including REST APIs for server-side processing and SDKs for popular programming languages like Python, C#, and JavaScript. Developers can test and customize models using the Speech Studio web portal. For scenarios requiring local processing or low latency, the service supports containerized deployments, allowing on-premises or edge-based execution.

Common Use Cases

The service is widely adopted across industries. In customer service, it powers voice bots and call analytics. In healthcare, it assists with clinical documentation through voice dictation. Media companies use it for automated subtitling and dubbing, while smart device manufacturers integrate it for voice-controlled interactions. Accessibility applications, such as real-time captioning for people who are deaf or hard of hearing, further demonstrate its versatility.

Best Practices for Implementation

To maximize performance, developers should use Custom Speech models for domain-specific terminology and preprocess audio to reduce background noise. Cost optimization can be achieved through batch processing and response caching. For real-time applications, deploying the service in Azure regions closest to users minimizes latency. Security measures, such as data encryption and compliance with regulations like GDPR, are essential for protecting sensitive information.
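Response caching is the easiest of these practices to show in code. The sketch below caches synthesized audio by voice and text so that a repeated prompt is only synthesized once. `SynthesisCache` and `fake_synthesize` are hypothetical names for this example, and the in-memory dictionary stands in for whatever store (disk, Redis, blob storage) a real deployment would use.

```python
import hashlib
from typing import Callable, Dict


class SynthesisCache:
    """Cache synthesized audio so repeated prompts are not billed twice."""

    def __init__(self, synthesize: Callable[[str, str], bytes]) -> None:
        self._synthesize = synthesize
        self._store: Dict[str, bytes] = {}
        self.misses = 0  # number of times the backend was actually called

    def get(self, voice: str, text: str) -> bytes:
        # The key covers both voice and text: same text, new voice = new audio.
        key = hashlib.sha256(f"{voice}\n{text}".encode("utf-8")).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._synthesize(voice, text)
        return self._store[key]


# Stand-in for a real text-to-speech call (hypothetical).
def fake_synthesize(voice: str, text: str) -> bytes:
    return f"{voice}:{text}".encode("utf-8")


cache = SynthesisCache(fake_synthesize)
first = cache.get("en-US-JennyNeural", "Welcome back.")
second = cache.get("en-US-JennyNeural", "Welcome back.")  # served from cache
other = cache.get("en-GB-SoniaNeural", "Welcome back.")   # different voice
print(cache.misses)
```

This pays off mainly for fixed prompts such as greetings and menu options. Text that is unique per user rarely repeats, so caching it adds storage cost without saving synthesis calls.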

Challenges and Considerations

Despite its advanced capabilities, the service faces challenges like accuracy degradation in noisy environments and the need for extensive datasets when training custom voices. Real-time translation may also introduce slight delays, which can affect user experience. Developers must weigh these factors when designing voice-enabled applications.

FAQs on Azure Speech Service

1. What is Speech Service in Azure?

Azure Speech Service is a cloud-based AI solution within Microsoft Azure that enables developers to integrate speech recognition, text-to-speech conversion, speech translation, and speaker recognition into applications. It uses advanced deep learning models to provide accurate and natural-sounding interactions, making it ideal for voice assistants, transcription services, and accessibility tools.

2. What is Azure Language Services?

Azure Language Services, offered by Microsoft as Azure AI Language, is a collection of AI-powered natural language processing (NLP) tools, including text analytics and conversational language understanding. While Azure Speech Service focuses on speech-related tasks, Language Services handles text-based language understanding, sentiment analysis, and language detection; text translation is provided by the companion Azure AI Translator service. Together, they enable comprehensive voice and text-based AI solutions.

3. What Languages Are Supported by Azure Speech?

Azure Speech Service supports more than 100 languages and dialects for speech-to-text and text-to-speech, including widely spoken languages like English, Spanish, Mandarin, and Hindi, as well as regional dialects. The exact count differs between the two features and grows as new locales are added, so check the current language support list before committing to a locale. Real-time translation is available for multiple language pairs.

4. What is Azure Speech Transcribe?

"Azure Speech Transcribe" is not a separate product; the term refers to the transcription (speech-to-text) capability of Azure Speech Service, which converts spoken audio into written text. It supports real-time transcription for live conversations (e.g., meetings, calls) and batch transcription for pre-recorded audio files. Businesses use it for automated captioning, meeting notes, and voice analytics.

5. How Does Azure Speech-to-Text Work?

Azure Speech-to-Text (STT) uses deep neural networks (DNNs) to analyze audio input, convert speech into text, and refine accuracy using language models. It supports custom speech models for domain-specific terms (e.g., medical, legal jargon) and adapts to different accents and speaking styles.

6. Can Azure Speech Service Recognize Multiple Speakers?

Yes, Azure Speech Service supports speaker diarization, which identifies and separates different speakers in a conversation. This is useful for meeting transcriptions, call center analytics, and interview recordings.
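Once a transcript carries speaker labels, turning it into readable meeting notes is mostly a grouping exercise. The sketch below merges consecutive segments from the same speaker into single turns. The segment data and the `Guest-1` / `Guest-2` labels are hand-written sample values, not output captured from the service, and `merge_turns` is a name chosen for this example.

```python
from itertools import groupby
from typing import List, Tuple

Segment = Tuple[str, str]  # (speaker label, recognized text)

# Hand-written sample of diarized segments in arrival order.
segments: List[Segment] = [
    ("Guest-1", "Hi, thanks for joining."),
    ("Guest-1", "Shall we start?"),
    ("Guest-2", "Yes, go ahead."),
    ("Guest-1", "Great."),
]


def merge_turns(items: List[Segment]) -> List[Segment]:
    """Join consecutive segments from the same speaker into one turn."""
    turns = []
    # groupby only groups adjacent items, which preserves turn order.
    for speaker, group in groupby(items, key=lambda seg: seg[0]):
        turns.append((speaker, " ".join(text for _, text in group)))
    return turns


turns = merge_turns(segments)
for speaker, text in turns:
    print(f"{speaker}: {text}")
```

Because only adjacent segments are merged, a speaker who talks again later in the conversation gets a new turn rather than being folded into their earlier one.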

7. What is Neural Text-to-Speech (Neural TTS) in Azure?

Neural TTS is an advanced text-to-speech feature that uses deep learning to generate human-like, natural-sounding speech. It offers customizable voices, including brand-specific custom voices, and supports Speech Synthesis Markup Language (SSML) for fine-tuning pronunciation and intonation.

8. How Secure is Azure Speech Service?

Azure Speech Service is covered by Microsoft’s security and compliance program, which addresses regulations such as GDPR and HIPAA and standards such as ISO/IEC 27001. Data is encrypted in transit and at rest, and enterprises can run the service in containers in private cloud or on-premises environments for additional control over where audio is processed.

9. Can Azure Speech Service Work Offline?

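Partly. Azure Speech Service can run in containers, allowing businesses to process speech data locally instead of sending audio to the cloud. Standard containers still need a connection to Azure to report usage for billing; fully offline operation requires disconnected containers, which Microsoft makes available on request and with approval. Containers are useful for edge computing, IoT devices, and environments with strict data residency requirements.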

10. What Are the Pricing Options for Azure Speech Service?

Azure Speech Service offers pay-as-you-go pricing with free tier limits. Costs depend on usage (e.g., per hour of transcription or per million characters for TTS). Custom speech and voice models may incur additional training and hosting fees.
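A back-of-the-envelope estimate follows directly from those two units. In the sketch below, the rates are placeholders invented for the arithmetic, not actual Azure prices, and `estimate_monthly_cost` is a hypothetical helper. Real rates vary by region, tier, and feature, and should be taken from the current pricing page.

```python
def estimate_monthly_cost(transcription_hours: float,
                          tts_characters: int,
                          rate_per_hour: float,
                          rate_per_million_chars: float) -> float:
    """Estimate usage cost from hours transcribed and characters synthesized."""
    stt_cost = transcription_hours * rate_per_hour
    tts_cost = (tts_characters / 1_000_000) * rate_per_million_chars
    return round(stt_cost + tts_cost, 2)


# Placeholder rates for illustration only (NOT actual Azure prices).
cost = estimate_monthly_cost(
    transcription_hours=10,
    tts_characters=2_500_000,
    rate_per_hour=1.00,
    rate_per_million_chars=16.00,
)
print(cost)  # 10 * 1.00 + 2.5 * 16.00 = 50.0
```

The estimate ignores free-tier allowances and any training or hosting fees for custom models, both of which would need to be added or subtracted for a realistic budget.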

Conclusion

Azure Speech Service is a powerful tool for integrating speech recognition, synthesis, and translation into applications. Its AI-driven models, customization options, and global language support make it a robust solution for businesses and developers. By following best practices, organizations can harness its full potential to enhance user interactions, improve accessibility, and streamline operations. As Microsoft continues to advance its AI technologies, the service is expected to become even more sophisticated, further bridging the gap between human and machine communication.
