15 Interview Questions and Answers for Agentic AI Job Roles

Agentic AI represents a new frontier in artificial intelligence, where systems are designed to act autonomously with goal-directed behavior, decision-making, and adaptability. As organizations increasingly seek professionals skilled in this domain, preparing for interviews requires a deep understanding of both theoretical and practical aspects of Agentic AI. Below are 15 key interview questions along with detailed answers to help candidates showcase their expertise effectively.

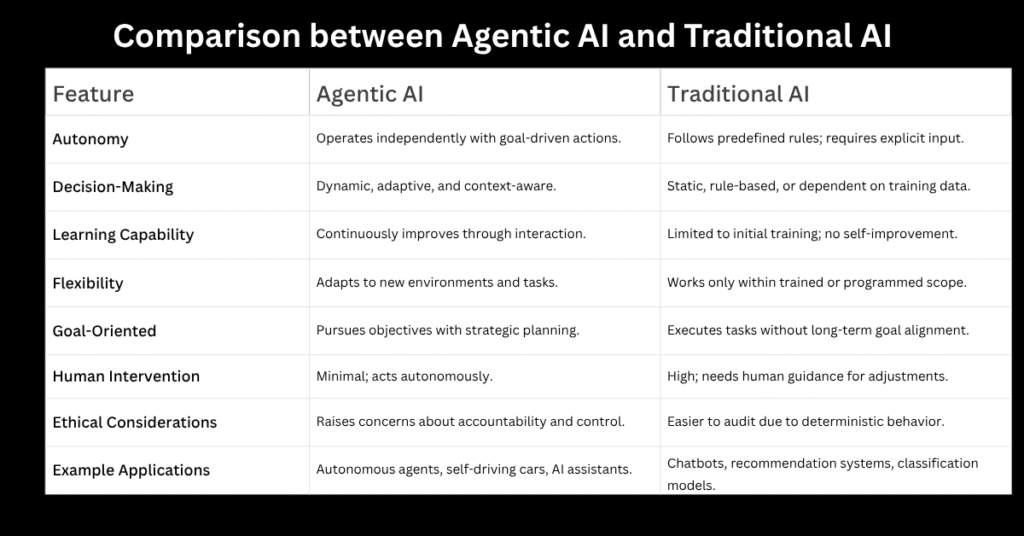

1. What is Agentic AI, and how does it differ from traditional AI?

Agentic AI refers to artificial intelligence systems that exhibit autonomous, goal-driven behavior, making decisions and taking actions with minimal human intervention. Unlike traditional AI, which often operates within predefined rules or requires explicit instructions, Agentic AI can adapt to dynamic environments, learn from interactions, and pursue objectives independently. This shift from passive tools to active agents marks a significant evolution in AI capabilities.

2. Can you explain the concept of “agency” in AI systems?

Agency in AI refers to the system’s ability to perceive its environment, make decisions, and take actions to achieve specific goals. An AI with agency operates with a degree of autonomy, assessing situations, planning steps, and adjusting strategies based on feedback. This requires a combination of perception, reasoning, learning, and execution, making such systems more adaptable and resilient in real-world scenarios.
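
The perceive, decide, act cycle described above can be sketched in a few lines. This is a minimal illustration rather than a production design: `ThermostatAgent`, `run_agent`, and the toy `room` dictionary are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """Hypothetical agent that tries to hold a room at a target temperature."""
    target: float
    tolerance: float = 0.5

    def perceive(self, environment: dict) -> float:
        # Perception: read the relevant part of the environment state.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Decision: compare the observation with the goal and pick an action.
        if temperature < self.target - self.tolerance:
            return "heat"
        if temperature > self.target + self.tolerance:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Execution: apply the chosen action to the toy environment.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta


def run_agent(agent: ThermostatAgent, environment: dict, max_steps: int = 20) -> list:
    """Run the loop until the agent is satisfied or the step budget runs out."""
    history = []
    for _ in range(max_steps):
        observation = agent.perceive(environment)
        action = agent.decide(observation)
        history.append(action)
        if action == "idle":
            break  # goal reached within tolerance
        agent.act(action, environment)
    return history


room = {"temperature": 17.0}
history = run_agent(ThermostatAgent(target=21.0), room)
```

In an interview, the point to draw out is that feedback comes from the environment: each action changes what the agent perceives on the next pass.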

3. What are some key components of an Agentic AI system?

An effective Agentic AI system integrates several core components. Perception modules allow it to interpret data from its environment, while decision-making engines use reasoning and learning algorithms to determine optimal actions. Memory and knowledge representation enable long-term planning, and execution mechanisms translate decisions into real-world outcomes. Additionally, feedback loops ensure continuous improvement, making the system more capable over time.

4. How does reinforcement learning contribute to Agentic AI?

Reinforcement learning (RL) is a critical enabler of Agentic AI, as it allows systems to learn optimal behaviors through trial and error. By receiving rewards or penalties for actions, an AI agent refines its strategies to maximize long-term success. RL is particularly valuable in dynamic environments where predefined rules are insufficient, empowering AI to develop sophisticated, adaptive decision-making processes.
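
To make the trial-and-error idea concrete, here is a small epsilon-greedy multi-armed bandit, one of the simplest RL settings. It is a sketch under stated assumptions: the function name `run_bandit`, the reward probabilities, and the hyperparameters are illustrative choices, not values from any particular system.

```python
import random


def run_bandit(true_means, steps=3000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a Bernoulli bandit (illustrative only)."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms        # how often each arm was pulled
    estimates = [0.0] * n_arms   # running average reward per arm

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random arm.
            arm = rng.randrange(n_arms)
        else:
            # Exploit: pick the arm with the best estimate so far.
            arm = max(range(n_arms), key=lambda a: estimates[a])

        # The environment returns a reward of 1 or 0.
        reward = 1.0 if rng.random() < true_means[arm] else 0.0

        # Incremental mean update: move the estimate toward the new reward.
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    return estimates, counts


estimates, counts = run_bandit([0.1, 0.5, 0.9])
most_pulled = max(range(len(counts)), key=lambda a: counts[a])
```

The agent is never told which arm pays best. It discovers this from rewards alone, and `epsilon` controls how much it keeps exploring.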

5. What ethical considerations arise with Agentic AI?

The autonomy of Agentic AI introduces significant ethical challenges, including accountability for decisions, bias in learning processes, and potential misuse. Ensuring transparency in decision-making, implementing robust safety measures, and establishing governance frameworks are essential to mitigate risks. Ethical AI development must prioritize alignment with human values while preventing unintended harmful consequences.

6. How can Agentic AI be applied in real-world business scenarios?

Agentic AI has transformative potential across industries. In healthcare, autonomous diagnostic agents can assist doctors by analyzing patient data and recommending treatments. In finance, AI agents can optimize trading strategies or detect fraud in real time. Supply chain management benefits from AI-driven logistics optimization, while customer service can be enhanced through intelligent virtual assistants that resolve issues autonomously.

7. What role does explainability play in Agentic AI?

Explainability is crucial for building trust in Agentic AI systems, especially in high-stakes applications like healthcare or finance. Since these systems make autonomous decisions, users need to understand the reasoning behind actions. Techniques such as interpretable machine learning, decision trees, and natural language explanations help demystify AI behavior, ensuring compliance with regulatory standards and fostering user confidence.

8. How do you ensure safety in autonomous AI agents?

Safety in Agentic AI involves multiple layers of precaution. Robust testing in simulated environments helps identify risks before deployment. Fail-safes and kill switches can halt operations if the agent behaves unpredictably. Additionally, alignment techniques aim to keep the AI’s objectives consistent with human intentions, reducing the risk of harmful deviations as the system learns and evolves.

Ways to ensure safety in autonomous AI agents:

- Robust Testing & Simulation
  - Conduct extensive testing in controlled and simulated environments before real-world deployment.
  - Use adversarial scenarios to identify vulnerabilities and failure modes.
- Fail-Safes & Kill Switches
  - Implement emergency shutdown mechanisms to halt the agent if it behaves unpredictably.
  - Define clear boundaries where human intervention is required.
- Alignment with Human Values
  - Ensure AI objectives align with ethical guidelines and human intentions.
  - Use techniques like Inverse Reinforcement Learning (IRL) to infer human preferences from observed behavior.
- Explainability & Transparency
  - Design AI systems to provide clear reasoning behind decisions (e.g., interpretable models, decision logs).
  - Use these explanations to audit and correct unsafe behaviors.
- Continuous Monitoring & Feedback Loops
  - Deploy real-time monitoring to detect anomalies or unintended actions.
  - Use reinforcement learning from human feedback (RLHF) to refine behavior.
- Redundancy & Multi-Agent Oversight
  - Use multiple AI agents to cross-validate decisions (e.g., consensus-based systems).
  - Require agreement so that no single agent can cause harm unchecked.
- Regulatory & Ethical Compliance
  - Follow AI safety standards (e.g., ISO, IEEE, or industry-specific guidelines).
  - Ensure compliance with legal frameworks to prevent misuse.
- Bias & Fairness Checks
  - Regularly audit training data and decision outputs for biases.
  - Mitigate risks of discriminatory or harmful behavior.
- Controlled Deployment (Phased Rollout)
  - Gradually introduce AI agents into real-world environments (e.g., shadow mode before full autonomy).
  - Monitor performance and adjust before scaling.
- Human-in-the-Loop (HITL) Safeguards
  - Maintain human oversight for critical decisions (e.g., medical diagnosis, autonomous driving).
  - Keep the AI in the role of an assistant rather than an unchecked authority.
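
Several of the safeguards listed above (action boundaries, human approval, a kill switch, and a decision log) can be combined in a thin wrapper around whatever executes the agent's actions. The sketch below is one possible shape, not a standard API: `GuardedExecutor`, its fields, and the action names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GuardedExecutor:
    """Hypothetical safety wrapper that sits between an agent and its actions."""
    allowed_actions: set                 # boundary: everything else is blocked
    needs_approval: set                  # actions that require a human decision
    approve: Callable[[str], bool]       # human-in-the-loop callback (assumed)
    max_blocked: int = 3                 # trip the kill switch after this many violations
    halted: bool = False
    blocked_count: int = 0
    log: list = field(default_factory=list)  # decision log for later audits

    def execute(self, action: str) -> str:
        if self.halted:
            # Kill switch engaged: nothing runs until a human resets it.
            outcome = "halted"
        elif action not in self.allowed_actions:
            self.blocked_count += 1
            outcome = "blocked"
            if self.blocked_count >= self.max_blocked:
                self.halted = True
        elif action in self.needs_approval and not self.approve(action):
            outcome = "rejected"
        else:
            outcome = "executed"  # a real system would perform the action here
        self.log.append((action, outcome))
        return outcome


executor = GuardedExecutor(
    allowed_actions={"read_report", "send_email", "issue_refund"},
    needs_approval={"issue_refund"},
    approve=lambda action: False,  # stand-in for a human reviewer who declines
    max_blocked=2,
)
requested = ["read_report", "issue_refund", "delete_database", "drop_table", "read_report"]
results = [executor.execute(a) for a in requested]
```

Here the second out-of-bounds request trips the kill switch, so even the harmless final request is refused until someone investigates. The log keeps a record of every attempt.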

9. What are the challenges in scaling Agentic AI solutions?

Scaling Agentic AI presents challenges such as computational resource demands, maintaining consistency across diverse environments, and managing complex interactions between multiple agents. Ensuring reliability at scale requires efficient algorithms, distributed computing frameworks, and continuous monitoring to detect and correct anomalies as the system operates in broader contexts.

10. How do multi-agent systems (MAS) enhance Agentic AI?

Multi-agent systems involve multiple AI agents collaborating or competing to achieve objectives, mimicking real-world ecosystems. MAS enhances Agentic AI by enabling decentralized problem-solving, where agents specialize in different tasks and coordinate for complex goals. Applications include swarm robotics, smart grid management, and collaborative decision-making in enterprises.
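
A simple way to picture specialization and coordination is a coordinator that routes each task to a capable agent with the lightest load. This is an illustrative sketch only. `Agent`, `allocate`, and the skill labels are hypothetical, and real MAS frameworks typically use richer negotiation or messaging protocols.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    skills: set
    assigned: list = field(default_factory=list)


def allocate(tasks, agents):
    """Assign each (task_name, required_skill) to the least-loaded capable agent.

    Returns the names of tasks that no agent could handle.
    """
    unassigned = []
    for task_name, skill in tasks:
        capable = [a for a in agents if skill in a.skills]
        if not capable:
            unassigned.append(task_name)
            continue
        # Balance load: pick the capable agent with the fewest tasks so far.
        chosen = min(capable, key=lambda a: len(a.assigned))
        chosen.assigned.append(task_name)
    return unassigned


agents = [
    Agent("planner", {"plan"}),
    Agent("coder_a", {"code"}),
    Agent("coder_b", {"code", "test"}),
]
tasks = [
    ("outline", "plan"),
    ("module_1", "code"),
    ("module_2", "code"),
    ("unit_tests", "test"),
    ("deploy", "ops"),
]
unassigned = allocate(tasks, agents)
assignments = {a.name: a.assigned for a in agents}
```

Tasks that nobody can handle are returned rather than silently dropped, which gives a human or a fallback agent a place to step in.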

11. What is the significance of human-AI collaboration in Agentic AI?

While Agentic AI operates autonomously, human oversight remains essential for ethical and practical reasons. Human-AI collaboration frameworks allow users to guide, correct, or override AI decisions when necessary. This hybrid approach leverages AI efficiency while retaining human judgment, ensuring balanced and responsible deployment in critical domains.

12. How do you evaluate the performance of an Agentic AI system?

Performance evaluation depends on the application but generally includes metrics like goal achievement rate, decision accuracy, adaptability to new scenarios, and computational efficiency. User feedback, robustness in edge cases, and alignment with intended outcomes are also assessed. Continuous monitoring and iterative testing ensure the system evolves effectively.
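
As a rough illustration, metrics such as goal achievement rate can be computed from logged episodes. The record fields and the `summarize` function below are assumptions made for the example. A real evaluation would choose metrics to fit the application.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Episode:
    """Hypothetical log record for one agent run."""
    goal_reached: bool
    steps: int
    human_overrides: int


def summarize(episodes):
    """Aggregate a few example metrics over a list of episodes."""
    if not episodes:
        raise ValueError("need at least one episode")
    successes = [e for e in episodes if e.goal_reached]
    return {
        # Share of runs in which the agent achieved its goal.
        "goal_achievement_rate": len(successes) / len(episodes),
        # Efficiency proxy: average steps taken on successful runs.
        "mean_steps_on_success": mean(e.steps for e in successes) if successes else None,
        # Share of runs in which a human had to step in at least once.
        "override_rate": sum(1 for e in episodes if e.human_overrides > 0) / len(episodes),
    }


episodes = [
    Episode(goal_reached=True, steps=10, human_overrides=0),
    Episode(goal_reached=True, steps=20, human_overrides=1),
    Episode(goal_reached=False, steps=50, human_overrides=2),
    Episode(goal_reached=True, steps=30, human_overrides=0),
]
report = summarize(episodes)
```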

13. What are the risks of goal misalignment in Agentic AI?

Goal misalignment occurs when an AI agent optimizes for unintended objectives, leading to harmful outcomes. For example, an AI tasked with maximizing engagement might promote misinformation if not properly constrained. Mitigation strategies include reward shaping, adversarial training, and rigorous validation to ensure the AI’s incentives align with human values.
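
The engagement example can be reduced to a toy comparison between a proxy objective and one that penalizes the unwanted side effect. The items, scores, and the `penalty` weight below are invented for illustration. Adding a penalty term is just one simple way to constrain an objective.

```python
# Hypothetical content items with made-up engagement and risk scores.
items = [
    {"title": "verified_news", "engagement": 0.6, "misinfo_risk": 0.05},
    {"title": "clickbait_rumor", "engagement": 0.9, "misinfo_risk": 0.8},
    {"title": "explainer", "engagement": 0.5, "misinfo_risk": 0.0},
]


def proxy_reward(item):
    # Misaligned objective: engagement is all that counts.
    return item["engagement"]


def penalized_reward(item, penalty=1.0):
    # Constrained objective: engagement minus a cost for misinformation risk.
    return item["engagement"] - penalty * item["misinfo_risk"]


naive_choice = max(items, key=proxy_reward)["title"]
constrained_choice = max(items, key=penalized_reward)["title"]
```

The agent optimizing the proxy picks the rumor because nothing in its objective says otherwise, while the penalized objective prefers the verified story.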

14. How can Agentic AI improve decision-making in uncertain environments?

Agentic AI excels in uncertainty by leveraging probabilistic reasoning, Bayesian inference, and real-time data assimilation. Unlike rigid systems, autonomous agents update their beliefs and strategies dynamically, allowing them to navigate ambiguity effectively. This is particularly valuable in fields like disaster response, financial markets, and autonomous driving.
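
Belief updating can be shown with the textbook Beta-Bernoulli model, where an agent revises its estimate of a success probability as evidence arrives. The function names and the observation sequence are illustrative assumptions.

```python
def update_beta(alpha, beta, outcomes):
    """Bayesian update of a Beta(alpha, beta) belief with success/failure outcomes."""
    for success in outcomes:
        if success:
            alpha += 1  # one more observed success
        else:
            beta += 1   # one more observed failure
    return alpha, beta


def posterior_mean(alpha, beta):
    """Expected success probability under a Beta(alpha, beta) belief."""
    return alpha / (alpha + beta)


# Start from a uniform prior, Beta(1, 1), meaning no opinion yet.
prior = (1, 1)
observations = [True, True, False, True]
alpha, beta = update_beta(*prior, observations)
belief = posterior_mean(alpha, beta)
```

Because the belief is a distribution rather than a single number, the agent can also gauge how uncertain it still is and decide whether to gather more data before acting.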

15. What future advancements do you foresee in Agentic AI?

Future advancements may include more sophisticated self-learning mechanisms, seamless human-AI interaction, and broader integration with IoT and edge computing. As AI models become more general-purpose, Agentic AI could evolve into truly autonomous systems capable of long-term planning, ethical reasoning, and creative problem-solving, revolutionizing industries and daily life.

Final Thoughts

Agentic AI is reshaping how intelligent systems operate, bringing both opportunities and challenges. Professionals in this field must balance technical expertise with ethical considerations to develop AI that is not only powerful but also safe and aligned with human needs. By mastering these concepts, candidates can confidently navigate interviews and contribute meaningfully to the future of autonomous AI.

Cybersecurity Architect | Cloud-Native Defense | AI/ML Security | DevSecOps

With over 23 years of experience in cybersecurity, I specialize in building resilient, zero-trust digital ecosystems across multi-cloud (AWS, Azure, GCP) and Kubernetes (EKS, AKS, GKE) environments. My journey began in network security—firewalls, IDS/IPS—and expanded into Linux/Windows hardening, IAM, and DevSecOps automation using Terraform, GitLab CI/CD, and policy-as-code tools like OPA and Checkov.

Today, my focus is on securing AI/ML adoption through MLSecOps, protecting models from adversarial attacks with tools like Robust Intelligence and Microsoft Counterfit. I integrate AISecOps for threat detection (Darktrace, Microsoft Security Copilot) and automate incident response with forensics-driven workflows (Elastic SIEM, TheHive).

Whether it’s hardening cloud-native stacks, embedding security into CI/CD pipelines, or safeguarding AI systems, I bridge the gap between security and innovation—ensuring defense scales with speed.
